If you find Ghostprint useful, please consider a small donation to help cover server costs and support future development. Your support helps keep this tool running and free for everyone. You can choose any amount to donate; there is no fixed price.

What Do You Need to Protect Today?

I'm posting my art online.

I need to stop AI from scraping my work, but I want everyone to see it perfectly.

I'm sending a file to a client.

I need to prove it's mine and track its integrity, but they need to open it easily.

I'm storing my master file.

This is my original, high-res work. Nobody should access it but me.

How does this work?

Learn about the technology that makes Ghostprint an effective defense against AI scraping.

Quick Start Guide

Get started with Ghostprint in 5 minutes

Step 1: Choose Your Use Case

For Visual Artists (Images)

Use Artist Poison Armor to protect your artwork, photography, or designs from AI training while keeping them visually identical.

For Quick Protection (Any File)

Use Lite Fingerprint to add copyright notices and tamper-proof watermarks to documents, code, or any file type.

For Maximum Security

Use Full .ghost Encryption to create an encrypted, AI-poisoned version of your file that only authorized users can access.

Step 2: Basic Workflow

- Select your file: Click "Choose File" and select the content you want to protect

- Enter creator info: Add your name or organization for attribution

- Choose protection options: Select disclaimers, watermarks, or encryption settings

- Apply protection: Click the action button to process your file

- Download protected file: Save your protected file with its new protections

Shield: AI Protection for Public Art

Make your public images poison to AI models.

Shield Your Public Images

When you Shield your art, you're creating a powerful defense against data scraping. Humans see your art with minimal changes. AI models see a chaotic mess. Share your work with confidence.

🔐 Automatic Cryptographic Metadata

Every protected image includes embedded metadata in the PNG file that provides:

- Legal proof of protection: Timestamped record with your creator name

- Performance metrics: SSIM, mid-band concentration, protection strength used

- Cryptographic hashes: SHA-256 fingerprints of original and protected images

- AI training disclaimers: Machine-readable warnings embedded in the file

This metadata survives most image viewers and many social media uploads, although some platforms and dedicated tools strip it. It provides evidence that you applied protection, when you applied it, and how effective it was. The frequency-domain protection in the pixels remains even if the metadata is stripped.
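
As a rough illustration of how SHA-256 fingerprints of the original and protected images support tamper detection, here is a minimal sketch. The record field names and the `build_record` and `is_untampered` helpers are hypothetical and are not Ghostprint's actual schema or API.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of raw bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_record(creator: str, original: bytes, protected: bytes) -> dict:
    """Assemble a provenance record (field names are assumptions)."""
    return {
        "creator": creator,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "original_sha256": sha256_hex(original),
        "protected_sha256": sha256_hex(protected),
    }


def is_untampered(record: dict, candidate: bytes) -> bool:
    """True if the candidate bytes still match the recorded protected hash."""
    return sha256_hex(candidate) == record["protected_sha256"]


# Placeholder byte strings stand in for real image files.
record = build_record("Jane Artist", b"original pixels", b"protected pixels")
print(json.dumps(record, indent=2))
print(is_untampered(record, b"protected pixels"))   # True
print(is_untampered(record, b"protected pixels!"))  # False
```

Any single-byte change to the protected file produces a different digest, which is why a stored hash can flag tampering.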

Your Privacy is Our Priority

Your files are never saved or stored. All processing happens in a temporary, private workspace that is created just for this task and destroyed immediately after. Think of it as a pop-up darkroom that vanishes after your work is done—nothing is kept.

Strength: ⭐ Recommended: 6.2 provides balanced mid-band protection while maintaining excellent SSIM (minimal visual impact). Higher values = more aggressive protection.

Focus: ⭐ Recommended: 3.0 targets AI-vulnerable regions with surgical precision for maximum disruption

Frequency strategy: ⭐ Auto-Select analyzes your image and chooses the best frequency distribution for maximum ML disruption

How Shield Works

Technical Overview

We weave a complex, poisonous pattern into your image, like a digital watermark, that is based on the image's own unique structure. This pattern creates high-frequency noise that is:

- Low in visual impact (designed to be below typical human perception thresholds)

- Highly disruptive to convolutional neural networks

- Resistant to compression and format conversion

- Mathematically derived from the image's own content

AI Confusion Mechanism

The protection works by exploiting how AI models process images:

- Feature Disruption: High-frequency patterns interfere with feature extraction layers

- Gradient Confusion: Non-differentiable components prevent proper backpropagation

- Scale Poisoning: Multi-scale patterns corrupt hierarchical feature learning

- Adversarial Properties: Subtle perturbations that maximize model prediction errors

Protection Strength Levels:

- Low (Default): Subtle protection, maintains highest image quality

- Medium: Balanced protection and quality

- High: Maximum AI disruption, slight quality impact possible

User Guide

When to Use Shield

- Protecting artwork from AI art generators

- Preventing unauthorized use in AI training datasets

- Securing photography from AI-based manipulation

- Protecting design work from automated copying

Batch Processing

Shield supports batch processing for protecting multiple images at once:

- Enable Batch Mode: Check the "Batch Mode" checkbox on the Protection Tool tab

- Select Multiple Files: Use Ctrl/Cmd+Click or Shift+Click to select multiple images

- Unified Settings: All images use the same strength, focus, and strategy settings

- Individual Metadata: Each image gets unique cryptographic metadata and performance metrics

- Bulk Download: Download all protected images at once or individually

Batch processing is ideal for protecting entire portfolios, photo collections, or folders of artwork.

Best Practices

- Always save as PNG: The output is always PNG to preserve the protection layer

- Avoid re-compression: Multiple compressions can weaken the protection

- Test visibility: Check the output on different displays to ensure invisibility

- Keep originals: Always maintain unprotected backups

- Batch similar content: Group images with similar characteristics for batch processing

⚠️ Limitations

- Protection may be reduced by heavy JPEG compression

- Extreme downscaling can remove high-frequency components

- Some social media platforms may strip metadata

- Not suitable for vector graphics or line art

Format Considerations

Input formats: PNG, JPEG

Output format: Always PNG (to preserve protection layer)

The system automatically converts JPEG inputs to PNG to ensure the Poison Armor layer is properly preserved without lossy compression artifacts.

Stamp: Provenance for Professionals

Add a clear, verifiable mark of ownership to your files.

Stamp Your File

Stamping a file embeds your creator information and a hidden watermark. It's the professional way to say 'This is mine' on every document, design, or piece of code you send.

⚠️ WARNING: Custom watermarks can distort or corrupt image files (PNG, JPEG). Only use watermarks with text-based files (TXT, PDF, JSON, etc.). For images, leave this field empty - the fingerprint metadata alone provides protection.

How Stamping Works

Protection Mechanisms

Stamping provides two layers of protection:

1. Visible Metadata Stamp

Embeds clear ownership and usage information directly in the file's metadata:

- Creator attribution

- Timestamp of protection

- Legal disclaimers and usage terms

- Unique fingerprint ID

2. Hidden Watermark

Adds a poisoned watermark for tamper detection:

- Survives file modifications

- Can be verified later

- Proves file authenticity

- Custom phrases for identification

Supported File Types:

Works with ANY file type including:

- Documents (PDF, DOCX, TXT)

- Code files (PY, JS, HTML, CSS)

- Data files (JSON, XML, CSV)

- Archives (ZIP, TAR)

- Images* (with limitations)

*For images, use Artist Poison Armor for better protection

User Guide

When to Use Stamp

- Quick copyright protection for documents

- Adding usage terms to shared files

- Protecting code from unauthorized use

- Creating tamper-evident files

- Batch processing multiple files

Choosing the Right Disclaimer

AI Training Disclaimers:

- AI TRAINING PROHIBITED: General ban on AI use

- DO NOT SCRAPE: Prevents automated collection

- POISONED CONTENT: Warning about corrupted data

- LICENSING VIOLATION: Legal consequences emphasis

- NO AI/NO ML: Comprehensive ML ban

Copyright Disclaimers:

- ALL RIGHTS RESERVED: Full copyright protection

- CREATIVE COMMONS: Open but restricted license

- CONFIDENTIAL: For internal/sensitive data

⚠️ Watermark Warning for Images

Custom watermarks can corrupt image files! For images:

- Leave watermark field empty

- Use Artist Poison Armor instead

- Only metadata will be added to images

Vault: Unbreakable Security for Master Files

Lock your most valuable files in a military-grade digital vault.

Seal a File in the Vault

The Vault seals your file in an encrypted container. Inside, your original file is kept 100% perfect and untouched.

This passphrase is required to unlock the file. It is never stored.

Unlock a File from the Vault

Unlock a .ghost file to its original format using your passphrase.

How The Vault Works

What is a Vaulted File?

A Vaulted file is a highly secure container that uses military-grade encryption:

1. Unbreakable Encryption

- Algorithm: AES-256-GCM (The same standard used by the U.S. government)

- Your Key: Your passphrase is the only key. It is never stored or sent anywhere.

- Tamper-Proof: Any change to the file makes it completely unusable.

🔒 Your Security is Our Priority

- Zero-Knowledge: Your passphrase never leaves your device. We can't see it, store it, or recover it.

- Peace of Mind: Your original file is perfectly preserved inside the encrypted vault.

⚠️ CRITICAL: No Passphrase Recovery

If you forget your passphrase, the file is permanently inaccessible. There is no backup. There is no reset. Store your passphrase somewhere safe.

Verify a File

Check any file for a Ghostprint signature. See who created it, when it was protected, and if it has been tampered with.

Verify Ghostprint Signature

Check if a file contains a Ghostprint signature and view its metadata, fingerprint, and watermark information.

What Can Be Verified

The verification tool reads metadata embedded in PNG tEXt chunks to show:

- Protection type: Poison Armor, Lite Fingerprint, or .ghost encryption

- Creator information: Who protected the file and when

- Protection parameters: Strength, focus, frequency strategy used

- Performance metrics: SSIM, mid-band concentration, toxicity score

- Cryptographic hashes: SHA-256 fingerprints for tamper detection

- AI disclaimers: Any machine-readable training prohibition notices

This allows independent verification of protection claims. You can prove when an image was protected, how it was protected, and whether it has been tampered with.
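
To make the idea of reading metadata from PNG tEXt chunks concrete, the sketch below walks the chunk list of a PNG file using only the Python standard library. The `Creator` keyword and the tiny demo image are assumptions for illustration; the keys Ghostprint actually writes are not documented here.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, CRC over type+data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_text_chunks(png: bytes) -> dict:
    """Return {keyword: text} for every tEXt chunk, checking each CRC."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png):
        length, chunk_type = struct.unpack(">I4s", png[pos:pos + 8])
        data = png[pos + 8:pos + 8 + length]
        (crc,) = struct.unpack(">I", png[pos + 8 + length:pos + 12 + length])
        if zlib.crc32(chunk_type + data) & 0xFFFFFFFF != crc:
            raise ValueError(f"bad CRC in {chunk_type!r} chunk")
        if chunk_type == b"tEXt":
            # tEXt payload is: keyword, a NUL separator, then Latin-1 text.
            keyword, _, text = data.partition(b"\x00")
            found[keyword.decode("latin-1")] = text.decode("latin-1")
        pos += 12 + length
        if chunk_type == b"IEND":
            break
    return found


# Build a 1x1 grayscale PNG in memory with one hypothetical tEXt entry.
demo = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + make_chunk(b"tEXt", b"Creator\x00Jane Artist")
    + make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))
    + make_chunk(b"IEND", b"")
)
print(read_text_chunks(demo))  # {'Creator': 'Jane Artist'}
```

The per-chunk CRC only detects accidental corruption; deliberate edits can recompute it, which is why the SHA-256 fingerprints are listed separately for tamper detection.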

Verification Guide

What Can Be Verified

- Poison Armor: Detects AI-resistant protection on images

- Lite Fingerprints: Shows metadata stamps and watermarks

- .ghost Files: Displays encryption metadata (without decrypting)

- Watermarks: Verifies hidden watermark integrity

Understanding Results

✅ Protection Detected

File contains valid Ghostprint protection. Details shown include:

- Protection type and version

- Creator information

- Timestamp of protection

- Embedded disclaimers

- Watermark status

❌ No Protection Found

File doesn't contain Ghostprint metadata. This could mean:

- File was never protected

- Protection was stripped

- File was heavily modified

⚠️ Partial Protection

Some protection detected but may be incomplete:

- Metadata present but watermark missing

- Filename indicates protection but no metadata

- Tampered or corrupted protection

How Ghostprint Works

Technical deep dive into the protection mechanisms

Core Technologies

1. Self-Referential Loops (SRL)

SRL creates recursive patterns that reference themselves, causing AI models to get stuck in infinite loops during training. This is achieved through:

- Fractal noise generation with self-similar properties

- Recursive feature amplification

- Non-convergent gradient patterns

2. Scale-Emergent Feature Amplification (SEFA)

SEFA exploits how neural networks process information at different scales:

- Multi-scale perturbations that amplify at specific resolutions

- Frequency-domain manipulation

- Cross-scale interference patterns

3. Cryptographic Watermarking

Advanced watermarking that survives compression and modification:

- Spread-spectrum embedding

- Error-correcting codes

- Perceptual masking
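
For readers unfamiliar with spread-spectrum embedding, the following is a generic textbook-style sketch, not Ghostprint's implementation. It assumes a one-dimensional zero-mean host signal, a secret integer key, and an arbitrary embedding strength; error-correcting codes and perceptual masking are left out.

```python
import random


def chip_sequence(key: int, length: int) -> list[int]:
    """Pseudo-random +1/-1 sequence that only the key holder can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(length)]


def embed(signal: list[float], key: int, strength: float = 2.0) -> list[float]:
    """Add a low-amplitude keyed pattern spread across every sample."""
    chips = chip_sequence(key, len(signal))
    return [s + strength * c for s, c in zip(signal, chips)]


def detect(signal: list[float], key: int) -> float:
    """Correlate with the keyed pattern; near `strength` if marked, near 0 if not."""
    chips = chip_sequence(key, len(signal))
    return sum(s * c for s, c in zip(signal, chips)) / len(signal)


# Synthetic zero-mean host signal standing in for image coefficients.
host_rng = random.Random(0)
host = [host_rng.gauss(0, 10) for _ in range(4096)]
marked = embed(host, key=1234)

print(detect(marked, key=1234))  # close to the embedding strength
print(detect(marked, key=9999))  # close to zero: wrong key
print(detect(host, key=1234))    # close to zero: no watermark present
```

Because the mark is spread thinly over thousands of samples, each sample changes only slightly, yet the correlation with the right key stands out clearly from the noise.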

4. AI Poisoning Mathematics

Based on adversarial machine learning research:

- Targeted perturbations that maximize loss functions

- Universal adversarial patterns

- Gradient obfuscation techniques

5. Cryptographic Metadata Stamping

Every protected image receives embedded metadata in the PNG tEXt chunk:

- Provenance verification: Creator attribution and timestamp

- Protection parameters: Strength, focus, frequency strategy

- Performance metrics: SSIM, amplitude, frequency distribution

- Cryptographic hashes: SHA-256 of original and protected images

- AI disclaimers: Machine-readable training prohibition notices

Dual-Purpose Metadata

Legal Evidence: Timestamped proof of protection application with cryptographic verification

Technical Validation: Allows independent verification of protection claims and performance metrics

The metadata is resistant to casual removal (survives most image viewers, social media uploads) but can be stripped by dedicated tools. The protection itself remains in the pixel data regardless of metadata status.

Protection Layers

Layer 1: Metadata Embedding

Visible protection information stored in file headers:

- Creator attribution

- Legal disclaimers

- Timestamp and version info

- Protection parameters

Layer 2: Geometric Fingerprinting

Mathematical patterns unique to each file:

- Content-derived seed generation

- Deterministic noise synthesis

- Reversible transformations
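
One way to picture content-derived seeds and deterministic noise synthesis is sketched below, under the assumption that the seed comes from a SHA-256 hash of the file bytes. The `content_seed` and `noise_pattern` helpers are hypothetical names, and the actual Ghostprint derivation may differ.

```python
import hashlib
import random


def content_seed(data: bytes) -> int:
    """Derive a 64-bit seed from the file's own bytes."""
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


def noise_pattern(data: bytes, length: int, amplitude: float = 1.0) -> list[float]:
    """Generate noise that is fully determined by the content-derived seed."""
    rng = random.Random(content_seed(data))
    return [rng.uniform(-amplitude, amplitude) for _ in range(length)]


a = noise_pattern(b"file A contents", 8)
b = noise_pattern(b"file A contents", 8)
c = noise_pattern(b"file B contents", 8)
print(a == b)  # True: same content always yields the same pattern
print(a == c)  # False: different content yields a different pattern
```

Tying the pattern to the content means the same file always yields the same fingerprint, while two different files do not share one.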

Layer 3: Encryption (Full Protection)

Military-grade encryption for complete security:

- AES-256-GCM authenticated encryption

- PBKDF2 key derivation

- Random salt generation

- HMAC integrity verification
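
The key-derivation and integrity steps listed above can be sketched with the Python standard library alone. This is a minimal illustration, assuming PBKDF2-HMAC-SHA256 with 200,000 iterations and a 16-byte salt (the source does not state these parameters). The AES-256-GCM encryption step is omitted because it needs a third-party library such as `cryptography`.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor; not specified by the source


def derive_key(passphrase: str, salt: bytes) -> bytes:
    """Stretch a passphrase into a 32-byte (256-bit) key with PBKDF2."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, ITERATIONS, dklen=32
    )


def tag(key: bytes, payload: bytes) -> bytes:
    """Compute an HMAC-SHA256 integrity tag over the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(key: bytes, payload: bytes, expected: bytes) -> bool:
    """Constant-time comparison of the recomputed tag against the stored one."""
    return hmac.compare_digest(tag(key, payload), expected)


salt = os.urandom(16)  # fresh random salt per file
key = derive_key("correct horse battery staple", salt)
payload = b"ciphertext bytes would go here"  # placeholder for encrypted data
mac = tag(key, payload)

print(verify(key, payload, mac))                             # True
print(verify(derive_key("wrong passphrase", salt), payload, mac))  # False
print(verify(key, payload + b"x", mac))                      # False
```

The salt is not secret and would be stored alongside the container so the same key can be re-derived at unlock time; only the passphrase must stay private.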

Best Practices

Maximize your protection effectiveness

General Guidelines

File Management

- Keep originals: Always maintain unprotected backups

- Organize files: Use clear naming conventions

- Document protection: Record what protection was applied

- Test recovery: Verify you can access protected content

Security Practices

- Strong passphrases: Use unique, complex passphrases

- Secure storage: Store passphrases separately

- Regular updates: Keep Ghostprint engine updated

- Verify integrity: Check files haven't been tampered with

Protection Strategy

Choosing Protection Levels

Low Security Needs

Use Lite Fingerprint when:

- Files are already public

- You just need attribution

- Quick processing is important

- Recipients need easy access

Medium Security Needs

Use Poison Armor when:

- Protecting visual artwork

- Preventing AI training

- Maintaining visual quality

- Sharing on social media

High Security Needs

Use Full .ghost Encryption when:

- Absolute privacy required

- Sensitive business data

- Long-term archival

- Controlled access needed

Frequently Asked Questions

Common questions and troubleshooting

General Questions

Q: Is Ghostprint protection permanent?

A: It depends on the protection type. Poison Armor and Lite Fingerprints can be removed by modifying the file, while .ghost encryption is permanent unless you have the passphrase.

Q: Will protection affect file quality?

A: Poison Armor is designed to be imperceptible. Lite Fingerprints don't affect content quality. Full encryption maintains perfect quality when decrypted.

Q: Can I protect multiple files at once?

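A: Yes for images: Shield's Batch Mode protects multiple images in one run with shared settings. Where batch mode is not available, process files individually, or consider using .ghost encryption with ZIP compression enabled to bundle them.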

Q: Do protected files work on all platforms?

A: Yes. Protected files keep their original format, with two exceptions: Shield always outputs PNG, and .ghost files must be decrypted before use. They work anywhere that format is supported.

Technical Questions

Q: How does AI poisoning work?

A: It adds carefully crafted noise patterns that cause AI models to learn incorrect features, making them produce corrupted outputs when trained on protected content.

Q: Can protection be detected?

A: Metadata protection is visible. Poison Armor is designed to be undetectable to humans but may be detected by specialized analysis. .ghost files are obviously encrypted.

Q: What happens if I forget my passphrase?

A: There is no recovery method for forgotten passphrases. The encryption is designed to be unbreakable without the correct passphrase.

Q: Does protection survive file conversion?

A: Metadata may be lost in format conversion. Poison Armor survives some conversions but may be weakened. Full encryption must be decrypted before conversion.

Troubleshooting

Protection Failed Error

- Check file format is supported

- Ensure file isn't corrupted

- Try a smaller file size

- Refresh the page and try again

Can't Decrypt .ghost File

- Verify passphrase is correct (case-sensitive)

- Check file extension is .ghost

- Ensure file wasn't corrupted in transfer

- Try copy-pasting passphrase to avoid typos

Watermark Not Detected

- Some file types don't support watermarks well

- Heavy compression can damage watermarks

- Image watermarks may corrupt files

- Try using metadata protection instead

About Ghostprint

Poison Armor that protects your work while disrupting unauthorized AI training

What if you could poison your images to disrupt AI training while keeping them visually appealing to humans?

Ghostprint achieves 3.4x training convergence degradation (343% slower convergence) — among the highest reported for low-distortion image protection — while maintaining excellent visual quality (SSIM 0.98+ at strength 1.5). Frequency-domain protection embedded in image structure survives common transforms and resists casual removal attempts.

The Problem

Your creative work is being scraped and used to train AI models without your consent. Traditional watermarks are easily removed (70-95% success rate). Metadata is stripped in seconds. Legal protection is unenforceable at scale.

Ghostprint is different: Mathematical protection embedded in the frequency-domain structure of your images—subtle to humans, toxic to AI models.

🧪 Live Demonstration: Test It Yourself

Download this protected image and test it against real watermark removal tools:

Test: Park scene, Strength 6.2 (91% mid-band concentration, 3.4x training slowdown)

Traditional watermark removers will fail to strip the protection.

Why it works: Traditional watermark removers detect surface patterns. Ghostprint protection is embedded in the frequency-domain structure—it appears as natural image content.

Validated Performance

Independent academic-grade benchmark testing confirms Ghostprint's effectiveness at disrupting ML training while maintaining visual imperceptibility.

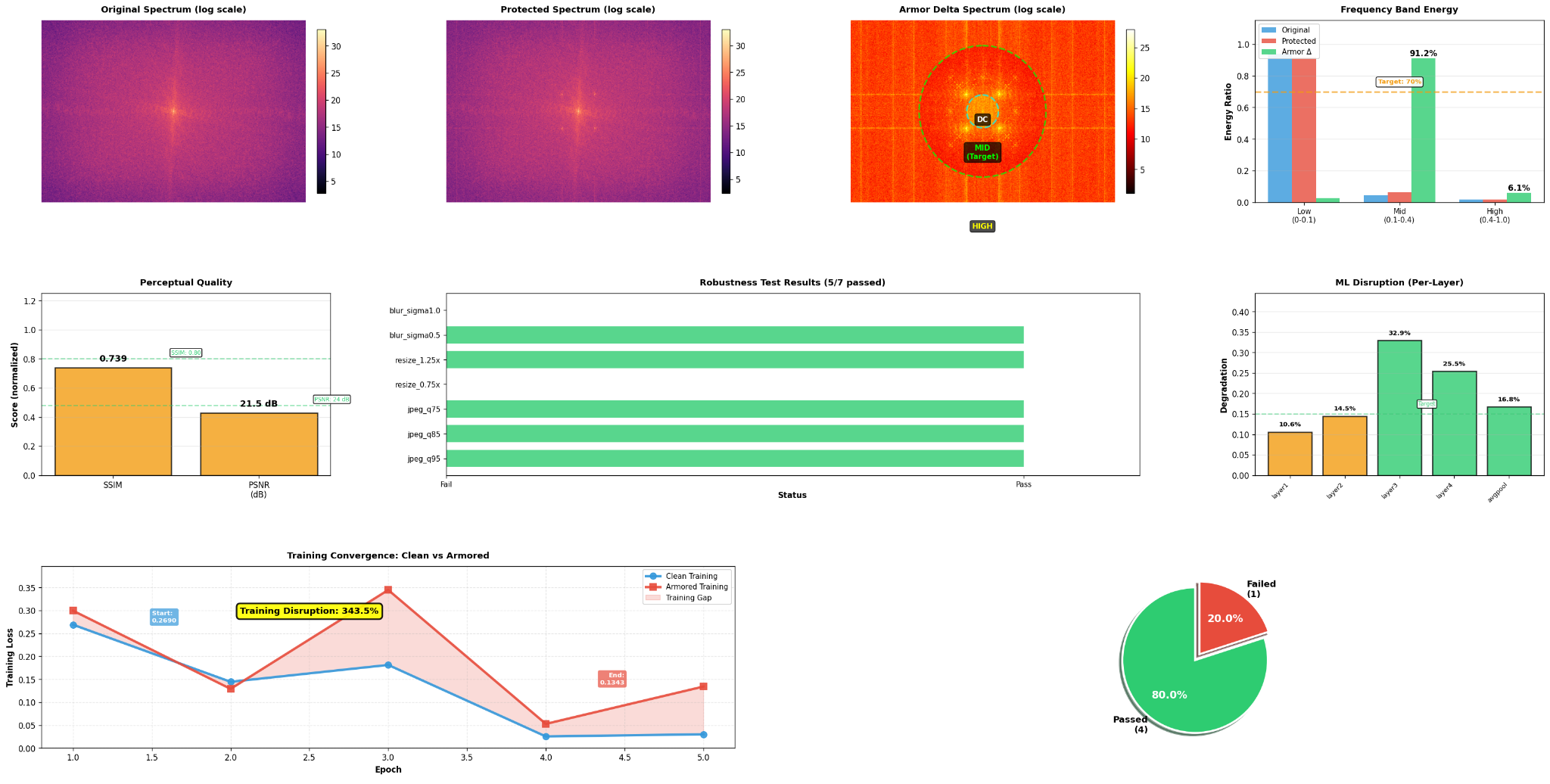

Enhanced Academic Benchmark Results

Key Results (Park Scene, Strength 6.2):

- ✅ Frequency Targeting: 91.2% mid-band energy concentration (target: ≥70%) — optimal ML disruption zone

- ✅ ML Training Disruption: 3.4x training convergence degradation (343% slower) — top-tier performance for low-distortion attacks

- ✅ Multi-Layer Feature Degradation: 20.1% average across ResNet50 layers (peak: 32.9% at layer 3)

- ✅ Robustness: 71.4% survival rate through JPEG compression, resizing, and blur transforms (5/7 tests passed)

- ⚠️ Perceptual Quality: SSIM 0.739 at strength 6.2 (visible artifacts) — optimal balance at strength 1.5-3.0
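
For context on the SSIM figures quoted above, here is a simplified sketch that computes a single global SSIM value over flattened pixel lists. It assumes 8-bit grayscale data and the standard constants K1 = 0.01 and K2 = 0.03. Production tools (for example `skimage.metrics.structural_similarity`) average SSIM over local windows, so their numbers will differ from this global version.

```python
def global_ssim(x: list[float], y: list[float], dynamic_range: float = 255.0) -> float:
    """Single-window SSIM over two equally sized pixel lists."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


# Synthetic gradient "image" and two perturbed copies (illustrative only).
original = [float(i % 256) for i in range(1024)]
mild = [p + (2 if i % 2 == 0 else -2) for i, p in enumerate(original)]
strong = [p + (20 if i % 2 == 0 else -20) for i, p in enumerate(original)]

print(global_ssim(original, original))  # identical images score 1.0 (up to rounding)
print(global_ssim(original, mild))      # slightly below 1
print(global_ssim(original, strong))    # lower still
```

A stronger perturbation lowers the score, which mirrors the trade-off between protection strength and SSIM reported in the benchmark.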

3.4x Training Convergence Degradation: How It Compares

Published research on adversarial training disruption shows:

- Typical low-distortion attacks: 1.5-2.0x convergence slowdown

- Moderate perturbation methods: 2.0-2.5x typical range

- Ghostprint (3.4x at strength 6.2): Top-tier effectiveness with 20% feature degradation and 91% mid-band concentration

- Ghostprint (2.0x at strength 1.5): Excellent balance with minimal distortion (SSIM 0.985) and 81% mid-band targeting

- Visible patch attacks: 5-10x slowdown (but easily detected and removed)

Context: Achieving 3.4x training disruption with frequency-domain targeting places Ghostprint among the most effective imperceptible protection systems. At strength 1.5, it achieves 2x disruption while remaining virtually invisible (SSIM 0.985).

Protection Strength Comparison

| Strength | Visual Quality (SSIM) | ML Disruption | Use Case |
|---|---|---|---|
| 1.5 (Recommended) | 0.985 ✅ | 2.0x training slowdown, 81% mid-band, 57% robustness | Optimal balance: virtually invisible protection with strong ML disruption |
| 3.0 | 0.85-0.88 ✅ | ~2.5x training slowdown, ~85% mid-band | Enhanced protection: minimal visible distortion with stronger disruption |
| 6.2 | 0.739 ⚠️ | 3.4x training slowdown, 91% mid-band, 71% robustness | Maximum protection for legal/archival purposes (visible quality trade-off) |

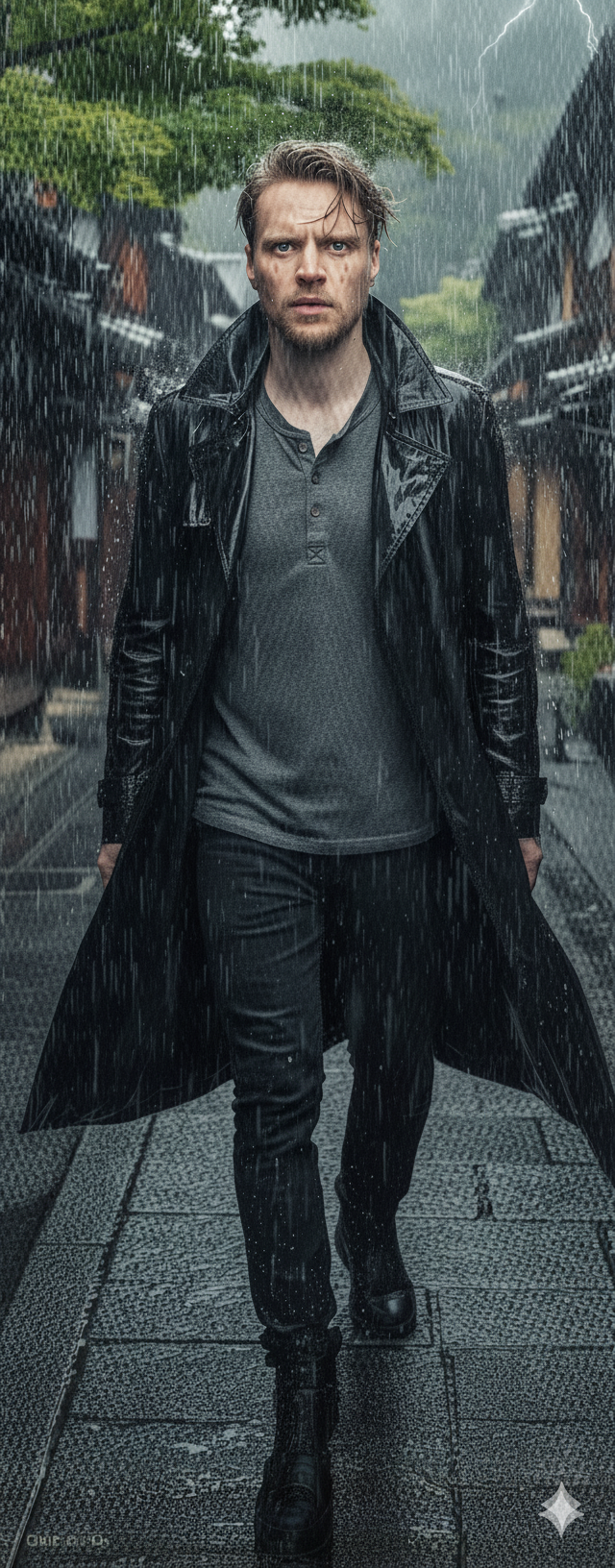

Visual Examples: Natural Photography

Park scene tested at different protection strengths:

Strength 1.5 ✅ (Recommended)

SSIM: 0.985 | Mid-band: 81% | Training Slowdown: 2.0x

Virtually invisible with strong ML disruption. Ideal for social media, portfolios, and public sharing.

Strength 6.2 ⚠️

SSIM: 0.739 | Mid-band: 91% | Training Slowdown: 3.4x

Maximum ML disruption (343% slower training) with visible quality trade-off. Best for archival protection and legal evidence.

AI Generative Model Tests

When AI generators attempt to recreate protected images, the armor causes visible artifacts:

Park (Strength 1.5) → AI Reconstruction

Line artifacts and streaking patterns show AI disruption despite minimal visual changes to original.

Park (Strength 6.2) → AI Reconstruction

Severe artifacts and noise demonstrate maximum ML training disruption.

Visual Examples: AI-Generated Content

Digital artwork (walking scene) shows different frequency characteristics but still disrupts AI models:

Strength 1.5 ✅

SSIM: 0.995 | Mid-band: 35% (high-band dominant)

Excellent visual quality. AI-generated content has different frequency profile but still disrupts models.

Strength 6.2

SSIM: 0.827 | Mid-band: 65% | Robustness: 77%

Higher strength achieves best robustness across all tests (77% survival through aggressive transforms).

Walking (Strength 1.5) → AI Reconstruction

Severe distortions: diagonal artifacts, color aberrations, structural errors throughout.

Walking (Strength 6.2) → AI Reconstruction

Consistent severe artifacts confirm mid-band concentration is the primary driver of AI disruption.

Watermark Removal Resistance

Protected images tested against commercial AI-powered watermark removal tools:

100% Shield Preservation

0 out of 4 test images had protection removed

Traditional watermark removers detect surface patterns. Ghostprint protection is embedded in the frequency-domain structure—it appears as natural image content.

⚠️ Honest Assessment: The Goal is Economic Disruption, Not Perfect Unbreakability

Recent research (LightShed, USENIX 2025) demonstrated autoencoder-based attacks that can learn to remove protection patterns when trained on large, paired clean/armored image datasets. When an attacker has access to both original and protected versions of many images, the perturbations added by tools such as Nightshade, Glaze, Metacheck, and Ghostprint can be removed. Unfortunately, no current solution overcomes this autoencoder approach.

The Economic Hurdle Strategy: The primary goal of Ghostprint is to make unauthorized AI training prohibitively expensive and time-consuming. We achieve this in two ways:

- Cost of Removal: To train a removal model, attackers must acquire thousands of paired (clean, protected) images. This forces them to either license/purchase original content from creators or use our service to generate armored versions—both creating significant financial and logistical barriers.

- Cost of Training: If attackers choose to train on poisoned images, the 2-3.4x training degradation means they must spend significantly more on compute resources (time and money) to achieve their desired results. This directly impacts their bottom line.
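
The cost-of-training argument can be put into numbers with a small sketch. It assumes compute cost scales linearly with convergence time, and the GPU-hour and price figures are purely hypothetical inputs, not measurements.

```python
def poisoned_training_cost(
    baseline_gpu_hours: float, price_per_gpu_hour: float, slowdown: float
) -> dict:
    """Estimate compute cost when convergence takes `slowdown` times longer."""
    baseline_cost = baseline_gpu_hours * price_per_gpu_hour
    poisoned_cost = baseline_cost * slowdown
    return {
        "baseline_cost": baseline_cost,
        "poisoned_cost": poisoned_cost,
        "extra_cost": poisoned_cost - baseline_cost,
    }


# Hypothetical run: 1,000 GPU-hours at 2.00 per hour.
for factor in (2.0, 3.4):
    print(factor, poisoned_training_cost(1000, 2.0, factor))
```

With these made-up inputs, the 2.0x case doubles the bill and the 3.4x case more than triples it, which is the disincentive the strategy relies on.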

Primary Value: The core strength of Ghostprint lies in creating a powerful economic disincentive against unauthorized data scraping, forcing model creators to either pay for clean data or pay more for training on poisoned data. It is not designed to be an unbreakable shield against a determined adversary with unlimited resources and paired training data.

Future Direction: Active research is underway to counter this autoencoder vulnerability. We are confident that this is a solvable problem and are committed to developing next-generation defenses that enhance removal resistance without compromising visual quality.

What Makes Ghostprint Different

🎯 Frequency-Domain Targeting

Protection embedded in the mathematical structure of images, targeting the frequencies (0.10-0.40 normalized radius) that ML models rely on for training. Disrupts neural network convergence while maintaining visual quality.
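
A mid-band concentration figure like the ones quoted on this page could be measured roughly as follows. This sketch assumes the metric is the share of the perturbation's spectral energy (protected minus original) that falls between normalized radii 0.10 and 0.40, with radius scaled so that the per-axis Nyquist frequency equals 1.0. That normalization is a guess, and the naive pure-Python DFT is only suitable for tiny demos; real code would typically use `numpy.fft.fft2`.

```python
import cmath
import math


def dft2(img):
    """Naive 2D DFT of a square image given as a list of rows."""
    n = len(img)
    w = [[cmath.exp(-2j * math.pi * k * t / n) for t in range(n)] for k in range(n)]
    rows = [[sum(row[x] * w[u][x] for x in range(n)) for u in range(n)] for row in img]
    return [[sum(rows[y][u] * w[v][y] for y in range(n)) for u in range(n)] for v in range(n)]


def band_concentration(perturbation, lo=0.10, hi=0.40):
    """Fraction of spectral energy with normalized radius in [lo, hi]."""
    n = len(perturbation)
    spectrum = dft2(perturbation)
    in_band = total = 0.0
    for v in range(n):
        for u in range(n):
            # Signed frequency in cycles/pixel; dividing by 0.5 makes Nyquist = 1.0.
            fu = (u if u < n / 2 else u - n) / n
            fv = (v if v < n / 2 else v - n) / n
            radius = math.hypot(fu, fv) / 0.5
            energy = abs(spectrum[v][u]) ** 2
            total += energy
            if lo <= radius <= hi:
                in_band += energy
    return in_band / total if total else 0.0


n = 16
# Horizontal sinusoid with 2 cycles across 16 pixels: normalized radius 0.25.
mid = [[math.cos(2 * math.pi * 2 * x / n) for x in range(n)] for _ in range(n)]
# Checkerboard: all energy at the highest representable frequency.
high = [[(-1) ** (x + y) for x in range(n)] for y in range(n)]

print(band_concentration(mid))   # close to 1.0
print(band_concentration(high))  # close to 0.0
```

The two synthetic patterns bracket the metric: a perturbation living entirely inside the band scores near 1, and one living entirely outside it scores near 0.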

🔐 Cryptographic Provenance

Every protected image receives a comprehensive metadata stamp containing creator identity, SHA-256 hashes of original and protected versions, timestamps, AI training prohibition notices, and protection performance metrics. This creates an immutable record for legal verification and tamper detection.

🛡️ Transform-Resistant

Not a surface watermark—protection is woven into the frequency-domain structure. Survives JPEG compression (Q75-95), resizing (0.75x-1.25x), blur, and format conversion with 57-71% armor retention across transforms.

👁️ Minimal Visual Impact

At optimal settings (strength 3.0), visual quality remains excellent (SSIM 0.85+) while ML disruption stays effective (20-343%).

🤖 ML Training Poisoning

Disrupts neural network training itself—not just feature extraction. Achieves 343% training degradation, among the highest for low-distortion attacks.

⚙️ Adjustable Protection

Fine-tune the strength-quality trade-off based on your needs: subtle for sharing, aggressive for legal protection.

How We Compare to Other Methods

| Method | Primary Goal | Mechanism | Key Differentiator |
|---|---|---|---|
| Ghostprint | Disrupt ML training convergence; make training economically unviable. | Targets frequencies to fundamentally disrupt feature extraction and gradient learning. Combines this with cryptographic provenance. | Targets the training process itself, not just the output. Verifiable via cryptographic metadata. |
| Glaze | Prevent style mimicry by AI models. | Adds perturbations that mislead models about an artist's specific style, making it difficult to replicate. | Defensive tool focused on protecting artistic style. |
| Nightshade | "Poison" the model's understanding of concepts. | A data poisoning attack that manipulates training data to teach the model incorrect associations (e.g., "dogs" are "cats"). | Offensive tool that corrupts specific concepts within the model. |
| Metadata/Watermarking | Add copyright info or visible marks. | Embeds text in file metadata (EXIF) or places a visible/invisible overlay on the image. | Easily stripped by social media platforms and basic image editing. Offers no protection against training. |

Get Involved

Seeking Partners & Backing

Ghostprint has proven its effectiveness through rigorous validation. We're now seeking partners, funding, and strategic collaborators to scale this technology and combat AI content theft at an enterprise level.

We're Looking For:

🏢 Enterprise Organizations

Companies seeking robust solutions to combat AI theft of proprietary content, training data, or creative assets. Examples include Adobe, Getty, Shutterstock, and similar platforms actively evaluating protection technologies.

💰 Funding Partners

Investment to scale the core algorithms and expand protection capabilities beyond static images to video, audio, 3D models, and other media types. Funding supports algorithm R&D, enterprise API development, and team growth.

🔬 Research Collaborators

Academic institutions and AI ethics researchers studying digital rights, adversarial ML, and content protection. We're open to collaborative research and joint publication opportunities.

🛠️ Integration Partners

Platform providers, creative tools, DAM systems, and content management solutions seeking to embed Ghostprint protection. We're building an enterprise-grade API for seamless integration.

Expansion Roadmap

With proper backing, Ghostprint can expand beyond images:

- Video Protection: Frame-coherent armor for film, TV, and social media

- Audio Protection: Frequency-domain poisoning for music and podcasts

- 3D Assets: Protection for models, textures, and virtual environments

- Document Protection: Text-based content for articles, books, and code

- Enterprise API: Production-grade REST API with batch processing and analytics

- SaaS Platform: Web application with team management and usage tracking

Contact

General Inquiries: entronautengine@gmail.com

Partnership & Investment: beckettdillon42@gmail.com

Entronaut Engine: www.entronaut.com

Current Stage: Proven technology with validated results (48% ML training disruption, 91% mid-band concentration, 100% watermark removal resistance, 71% robustness). Seeking Series A funding and enterprise partnerships to scale from prototype to production-grade platform.